In the long journey to make machines speak more like humans, we have focused on lexical accuracy, lifelike tone, and even vocal texture. Yet we may all have overlooked a secret hidden between the lines, one that carries breath, emotion, and thought: the pause.

Today, we want to share a seemingly small but deeply human-centric advancement: We have enabled machines to understand human silence.

Where Does the "Mechanical Feel" of Machines Come From?

Imagine two versions of a voiceover:

- Version A: Flawless enunciation, delivered in one breath. Information arrives rapid-fire, without a single gap.

- Version B: It hesitates slightly at key moments, builds suspense before transitions; its rhythm itself tells you: "This is important," "Let's think," "The story is about to turn."

Which one moves you more? The answer is self-evident.

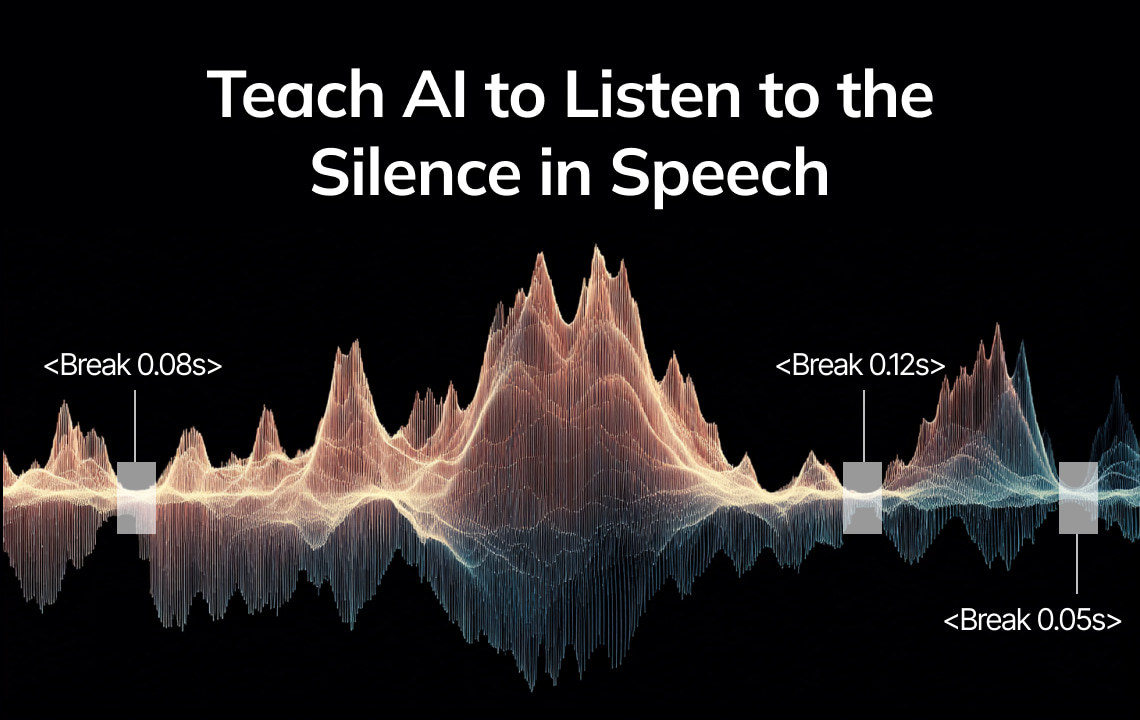

We have long been accustomed to human speech being not a smooth line but a mountainous soundscape of peaks, valleys, and breath. Those well-timed silences are the punctuation of speech, the carriers of emotion, and precious buffers for the listener's brain to digest information.

The "mechanical feel" of traditional machine voiceovers largely stems from this—they possess a good voice, but lack a heart that knows how to "breathe."

Beyond Punctuation: From "Grammar Rules" to the "Rhythm of Life"

An obvious idea might be: just have the machine follow punctuation rules. Pause longer for periods, shorter for commas.

But real-world speech is far more vibrant and complex than that.

- When excited, people might speak a whole passage in one breath, disregarding punctuation.

- When recalling or thinking, a pause might occur after any word, potentially where there is no comma.

- Different languages have inherently different rhythms. The information carried by ten Chinese characters might take a stream of swift Spanish syllables to convey, while the rhythm of Japanese lies in the dense interplay of kana and particles.

Punctuation is merely the skeleton of text, while authentic pauses are the lifeblood flowing within the voice.

Our Answer: Replicating the "Breathprint" of Life

So, we changed our approach. We stopped commanding the machine to "guess based on rules," and instead guided it to "listen through data."

- Listening for Traces: We use Automatic Speech Recognition (ASR) technology. It not only outputs text but, more valuably, records the "birth" and "end" timestamps of each word on the timeline.

- Interpreting Silence: We examine the time intervals between words. Those unusually lengthened gaps are the genuine "breathing holes" left by the original speaker. This is not random emptiness; it is the prosody of language, the trace of thought.

- Cultural Adaptation: We calibrate the "sensitivity" of listening for different languages. For compact-rhythm languages like Chinese and Japanese, we use tighter thresholds to capture subtler pauses; for languages like Thai and Burmese, whose scripts run words together without spaces, we use wider thresholds to perceive their more spacious rhythm.

In essence, we are translating the "life rhythm" of sound—those silences laden with hesitation, emphasis, recollection, and emotion—into labels that machines can understand, and then asking the TTS to reproduce it in audio.
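The three steps above can be sketched roughly as follows. This is a minimal illustration, not the production pipeline: the `Word` record, the `insert_breaks` function, and every threshold value are assumptions made for the example, and the word timestamps stand in for whatever an ASR system would return.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start_ms: int  # word onset on the audio timeline
    end_ms: int    # word offset on the audio timeline


# Assumed, illustrative thresholds (ms); not the values used in production.
GAP_THRESHOLD_MS = {"zh": 200, "ja": 200, "th": 350, "my": 350}
DEFAULT_THRESHOLD_MS = 250


def insert_breaks(words: list[Word], lang: str) -> str:
    """Join words, adding a <break> tag wherever the inter-word gap is long."""
    threshold = GAP_THRESHOLD_MS.get(lang, DEFAULT_THRESHOLD_MS)
    parts = []
    for current, following in zip(words, words[1:]):
        parts.append(current.text)
        gap = following.start_ms - current.end_ms
        if gap >= threshold:
            parts.append(f'<break time="{gap}ms"/>')
    if words:
        parts.append(words[-1].text)
    # Space-joining suits English; unspaced scripts would need a different join.
    return " ".join(parts)


words = [
    Word("real", 1000, 1200),
    Word("time", 1250, 1500),
    Word("and", 1800, 1950),
    Word("delivers", 2000, 2400),
]
print(insert_breaks(words, "en"))
```

With these made-up timestamps, only the 300 ms gap between "time" and "and" crosses the default threshold, so a single tag is emitted. Passing `"th"` instead raises the threshold to 350 ms and the same gap is left unmarked, which is the per-language "sensitivity" idea in miniature.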

A Simple Example to Feel the Weight of "Breath"

The original speaker might say:

"Our new model processes data in real time … (a natural inhalation and turn) … and delivers results within seconds."

A standard Text-to-Speech (TTS) would state it flatly:

"Our new model processes data in real time and delivers results within seconds."

After our system has "listened" to the original audio, it generates:

"Our new model processes data in real time <break time="300ms"/>and delivers results within seconds."

These 300 milliseconds are not a cold algorithmic parameter. They are a moment of genuine silence "harvested" from the original audio, a thinking time the speaker left for you.

Why Insert Tags Instead of Stretching Audio?

Because we pursue "precision," not "homogenization." We work like a skilled conductor who lifts the baton only at the end of a musical phrase, rather than slowing down the entire movement equally. Inserting <break> tags allows us to:

- Exercise Fine Control: Inject silence exactly where it's truly needed.

- Ensure Absolute Reliability: Guarantee each pause is within a reasonable range, avoiding unnatural long gaps.

- Maintain Broad Compatibility: Enable this understood "silence" to be accurately "performed" by major TTS engines.

Guarding This "Understanding," Preventing Misuse

Any sophisticated mechanism needs robust guardrails. Therefore, we have implemented multiple safeguards:

- Choosing Humility when Uncertain: If the speech recognition quality is poor, we prefer not to add any pauses rather than implant incorrect "breaths."

- Filtering False "Silence": We identify and ignore unnaturally long intervals caused by recognition errors.

- Protecting Output Purity: We strip any extraneous formatting that might be accidentally added, ensuring the final instruction is clean.

- Quantifying "Naturalness": We round pause durations to 10 ms steps, avoiding unnatural micro-jitter and keeping the rhythm smooth and stable.
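As a rough sketch of how these four guardrails might fit together: the function names and every limit below (`MIN_CONFIDENCE`, `MIN_GAP_MS`, `MAX_GAP_MS`) are hypothetical, chosen only to make the example concrete. Only the 10 ms step comes from the description above.

```python
import re

# Assumed limits for illustration only.
MIN_CONFIDENCE = 0.80  # below this mean ASR confidence, add no pauses at all
MIN_GAP_MS = 250       # shorter gaps are ordinary word spacing
MAX_GAP_MS = 2000      # longer gaps are treated as recognition errors


def quantize_ms(ms: int, step: int = 10) -> int:
    """Round a duration to the nearest multiple of `step`, halves rounding up."""
    return (ms + step // 2) // step * step


def safe_pauses(gaps_ms: list[int], confidences: list[float]) -> list[int]:
    """Return the pauses worth keeping, or none if recognition looks unreliable."""
    if not confidences:
        return []
    if sum(confidences) / len(confidences) < MIN_CONFIDENCE:
        return []  # choose humility when uncertain
    return [quantize_ms(g) for g in gaps_ms if MIN_GAP_MS <= g <= MAX_GAP_MS]


def strip_stray_tags(text: str) -> str:
    """Remove every <...> tag except a well-formed <break time="Nms"/>."""
    return re.sub(r'<(?!break time="\d+ms"/>)[^>]*>', "", text)


print(safe_pauses([304, 120, 5000, 287], [0.90, 0.95]))
print(strip_stray_tags('Hi <b>there</b> <break time="300ms"/>you'))
```

Here the 120 ms gap is dropped as ordinary spacing, the 5000 ms gap is dropped as a likely recognition error, and the two surviving pauses are snapped to 300 ms and 290 ms. Rounding with integer arithmetic keeps the result independent of floating-point behavior.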

Ultimately, What Difference Have We Achieved?

When machines learn silence, the change is subtle yet palpable.

- When telling a story, it gains a sense of suspense.

- When expressing an opinion, it gains a sense of power.

- When laying out descriptions, it gains a sense of imagery.

Users might not articulate the technical principles precisely, but their feedback points to the most essential feeling: "This voice sounds more real." "It doesn't feel rushed; it's comfortable."

This "naturalness" lies precisely within those perfectly replicated, seemingly insignificant silences.

An Analogy

If we compare a sentence to a path, with words as the paving stones, then the time between words is the spacing between the stones.

What we do is observe where the spacing has naturally widened, for those are the "breathing points" of the speaker's mind, and then, right there, we lay down a soft carpet of time (a <break> tag), allowing the voice to pause and breathe there.

The Road Ahead

This is just the beginning. In the future, we aspire for machines not only to replicate silence but to understand the emotion behind it: is it the hesitation of doubt, the solemnity after emphasis, the negative space before a transition, or the speechlessness of being moved?

We dream that it can intelligently adjust this "silence" based on the cultural rhythm of the target language, so that the translated voice is not only accurate in meaning but also harmonious in prosody.

Thus, what the machine produces is no longer just a correct sequence of words, but begins to possess the rhythm of life.

We have enabled machines to understand the unspoken parts of speech.

VMEG Video Translator
