When precise German meets concise Chinese, or when the lyrical flow of Spanish encounters the reserved tone of Japanese — the efficiency gap between languages begins to reshape how we experience translated videos.

The Hidden Challenge of Global Video Translation

In today’s global media landscape, viewers often encounter a subtle dissonance: the dubbed voice finishes too early or lags behind the visuals, breaking immersion.

Behind this lies a simple linguistic fact: different languages pack different amounts of information into each syllable, so the same content needs a different number of syllables once it is translated.

The “Speed Game” of Human Language

A 2019 study in Science Advances (Coupé et al.) revealed that human languages have evolved toward a natural equilibrium.

Despite vast differences in information density — how much meaning is packed per syllable — most languages maintain a similar transmission rate of ~39 bits per second.

In essence:

- High-density languages (e.g., Chinese, Vietnamese) express more meaning per syllable → slower pace.

- Low-density languages (e.g., Spanish, French, Japanese) require more syllables → faster speech.

- Medium-density languages (e.g., English, German) balance the two extremes.

“Languages are like vehicles — some take large, slow strides, others smaller, quicker ones — yet all move forward at roughly the same speed.” — The Economist (2019)
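The trade-off in the list above can be written as a simple product, using the quantities measured by Coupé et al. The symbols and the sample figures that follow are illustrative assumptions chosen for round arithmetic, not values reported in the study.

$$
\mathrm{IR} = \mathrm{ID} \times \mathrm{SR}
$$

Here IR is the information rate (bits per second), ID is the information density (bits per syllable), and SR is the speech rate (syllables per second). For instance, a hypothetical dense language at 8 bits per syllable spoken at 5 syllables per second and a hypothetical sparse language at 5 bits per syllable spoken at 8 syllables per second both arrive at 40 bits per second.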

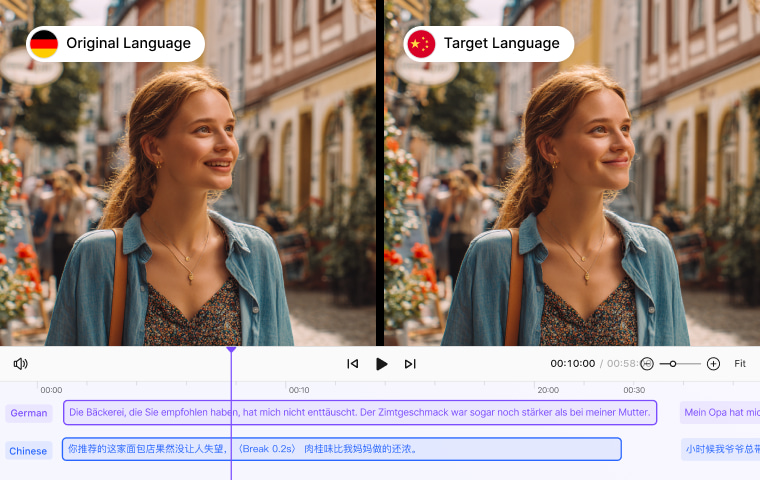

A Breakthrough: Dynamic Visual Pace Adjustment

Traditional dubbing systems focus on translation accuracy, ignoring rhythm differences.

Our Dynamic Visual Pace Adjustment system takes a different approach to synchronization: it compares the information density of the source and target languages and dynamically adjusts playback speed to keep audio and video aligned as closely as possible.

Core Mechanism and Decision Logic

| Density Tier | Linguistic Traits | Example Languages |
| --- | --- | --- |
| High | Compact, meaning-rich | Chinese (Mandarin, Cantonese), Vietnamese |
| Medium | Balanced structure | English, German, Korean, Russian |
| Low | Longer utterances, faster | Spanish, French, Italian, Japanese |

Rules (applied when "Allow Adjustments to Video Speed" is enabled in your video translation task):

- Stretch when needed: From a higher-density tier to a lower-density one, that is, high/medium → low (e.g., Chinese → Spanish, English → Japanese) or high → medium (e.g., Chinese → English). → Slightly slow playback to make room for the longer translated speech.

- Keep pace: From low → high/medium (e.g., French → Chinese, Japanese → English). → Maintain original speed to avoid lag.

- Same-tier translation: Keep the natural rhythm unchanged.
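The rules above can be sketched as a small tier lookup. This is a minimal illustration under stated assumptions, not the product's implementation: the names `Density`, `TIERS`, and `pace_action` are hypothetical, and the handling of medium → high pairs, which the rules do not mention, is assumed to be "keep".

```python
from enum import IntEnum


class Density(IntEnum):
    """Information-density tiers from the table above (hypothetical type)."""
    LOW = 0
    MEDIUM = 1
    HIGH = 2


# Tier assignments copied from the table; the dictionary itself is illustrative.
TIERS = {
    "Mandarin": Density.HIGH,
    "Cantonese": Density.HIGH,
    "Vietnamese": Density.HIGH,
    "English": Density.MEDIUM,
    "German": Density.MEDIUM,
    "Korean": Density.MEDIUM,
    "Russian": Density.MEDIUM,
    "Spanish": Density.LOW,
    "French": Density.LOW,
    "Italian": Density.LOW,
    "Japanese": Density.LOW,
}


def pace_action(source: str, target: str, allow_speed_adjustment: bool = True) -> str:
    """Return "stretch" (slow playback slightly) or "keep" (original speed)."""
    if not allow_speed_adjustment:
        return "keep"
    src, tgt = TIERS[source], TIERS[target]
    # Moving to a lower-density tier means longer translated speech.
    if tgt < src:
        return "stretch"
    # Same tier, or moving to a higher-density tier (assumption for medium -> high).
    return "keep"
```

Calling `pace_action("Mandarin", "Spanish")` yields `"stretch"`, while `pace_action("French", "Mandarin")` yields `"keep"`, matching the examples in the rules.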

A Subtle but Transformative Experience

- Seamless A/V synchronization: lip movements (when "Lip-Sync" is enabled for the translated video) and emotions match naturally.

- Adaptive multilingual support — optimal pacing for each language pair.

- Context-aware optimization — ideal for documentaries, lectures, and corporate presentations.

Real-world Scenarios

1. Chinese Documentary → Spanish Dub

Issue: Translated narration takes ~30% longer.

Fix: Slightly slow down playback.

Result: Equal pacing and flow.

2. French Film → Chinese Dub

Issue: Chinese is more concise.

Fix: Keep original speed.

Result: Natural rhythm, no dead air.

3. English Tutorial → Japanese Version

Issue: Japanese has lower information density.

Fix: Mild visual stretching.

Result: Smooth, relaxed delivery.
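For scenarios like these, the amount of slowdown can be estimated from the two durations. The following is a minimal sketch assuming a plain duration ratio; the function `playback_rate` and its `min_rate` floor are hypothetical and are not taken from the product.

```python
def playback_rate(original_seconds: float, dubbed_seconds: float,
                  min_rate: float = 0.7) -> float:
    """Estimate a video playback rate so the dubbed audio fits the visuals.

    A rate of 1.0 means original speed; values below 1.0 slow the video down.
    The video is never sped up, mirroring the "keep pace" rule, and never
    slowed below `min_rate` (an assumed safety floor).
    """
    if original_seconds <= 0 or dubbed_seconds <= 0:
        raise ValueError("durations must be positive")
    rate = original_seconds / dubbed_seconds
    return max(min_rate, min(1.0, rate))


# Scenario 1: the dubbed narration runs about 30% longer than the original.
chinese_to_spanish = playback_rate(100.0, 130.0)  # roughly 0.77

# Scenario 2: the dubbed narration is shorter, so the original speed is kept.
french_to_chinese = playback_rate(100.0, 80.0)  # 1.0
```

Clamping to at most 1.0 reflects the choice to leave the video untouched when the translation is shorter rather than speeding it up.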

Scientific Foundations

- Cross-linguistic research on 17 languages and 170 native speakers (Coupé et al., 2019);

- Over 240,000 syllables analyzed from real speech;

- Model parameters continuously refined with new linguistic evidence.

Future Outlook

- Context-sensitive pacing — adjust based on genre (dialogue, narration, poetry, etc.);

- Personalized learning — adapt to user preferences;

- Real-time synchronization — dynamic tempo tuning within scenes.

Conclusion

In an age of global communication, true innovation comes not only from engineering but from understanding human diversity.

Dynamic Visual Pace Adjustment represents both — a marriage of linguistic insight and intelligent design.

When technology adapts to language diversity — rather than forcing humans to adapt to machines — we move toward a more inclusive and intelligent digital future, where every language can be heard, understood, and appreciated in its own rhythm.

References

- Coupé, C., Oh, Y. M., Dediu, D., & Pellegrino, F. (2019). Different languages, similar encoding efficiency: Comparable information rates across the human communicative niche. Science Advances, 5(9).

- Why are some languages spoken faster than others? (2019). The Economist.