People often use the terms “closed captions” and “subtitles” interchangeably because both appear as lines of text at the bottom of the screen. They are not the same.

Understanding the difference between closed captions and subtitles matters because it determines how you use them for accessibility compliance, user experience, viewer retention, searchability, and global reach.

This article explains what each term really means, from definitions to selection criteria, and breaks down their functional and technical differences. By the end, you will know how to choose the right option for your video localization and audience goals.

What Are Closed Captions

Closed captions (often abbreviated as CC) are on-screen text that represents everything you hear in a video, displayed in the same language as the audio.

Captions cover dialogue and non-speech sounds like [door creaks] and [applause]—they’re designed to make the full audio experience accessible.

Captions are mainly used by people who are Deaf or hard of hearing, but they’re also useful when watching with the sound off in a library, or when audio is hard to make out in a noisy café.

Key things to know about closed captions:

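- Same language as the audio.

- Include more than speech: sound effects, music, and other non-speech audio.

- Add context, such as speaker labels and tone cues.

- Delivered as a separate track that viewers can turn on or off.

- Legally required in some places and contexts.

- Styled for readability: keep lines short and use brackets for non-speech sounds.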

What Are Subtitles

Subtitles are also time-synced text, but their goal is to help viewers understand speech, which is crucial for localization and reaching international audiences. Subtitles transcribe or translate the spoken dialogue only, not every sound.

Unlike captions, subtitles assume the viewer can hear the rest of the audio. For example, you’ll see the translation of what a character says, but you won’t usually see notes like [laughter] or [music playing]—unless the style is specifically “SDH” (Subtitles for the Deaf and Hard of Hearing).

Key things to know about subtitles:

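- Same or different language: subtitles can match the audio or translate it.

- Dialogue-focused: non-speech sounds are usually omitted.

- Global use: widely used to distribute content internationally.

- Optional or burned in: offered as a selectable track or permanently embedded in the video.

- Translation and clarity: the priorities are accurate translation and easy reading.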

Closed Captions vs. Subtitles: Key Differences

In short, captions replace sound, while subtitles translate words. The differences show up in six areas: purpose, audience, content scope, language, regulations, and user expectations.

Purpose

- Closed captions exist to make video content accessible and act as a replacement for audio. If you can’t hear the audio, captions give you everything you need to follow along.

- Subtitles help language comprehension and act as a supplement to the audio. They help people who can hear the audio but don’t understand the spoken language (or prefer to read along).

Audience

- Captions: Viewers who are Deaf, hard of hearing, or simply watching in “sound-off” mode.

- Subtitles: Hearing viewers watching content in an unfamiliar language or accent.

Content Scope

- Captions include dialogue plus background noises, music, and tone cues, usually written in brackets: [door creaks], [laughter], [somber music fades].

- Subtitles usually stick to dialogue only—unless they’re specifically SDH (Subtitles for the Deaf and Hard of Hearing).

Language

- Captions almost always stay in the original spoken language.

- Subtitles can be either the same language (for clarity) or translated into another language.

Regulations

- Captions are often required by law. In the U.S., FCC rules, the ADA, and Section 508 all come into play, and the WCAG guidelines are widely used as the technical benchmark. These requirements apply across TV, streaming, education, and government content.

- Subtitles are usually optional and driven by market demand, although some regions have localization policies that mandate them.

User Expectations

- Captions: Viewers expect a complete representation of the audio. Miss a lyric, a scream, or a sarcastic tone, and accessibility suffers.

- Subtitles: Viewers expect clear, well-timed, culturally accurate text.

Closed Captions vs. Subtitles: Processes

Closed captions and subtitles follow largely the same workflow, but their goals shape the details. Captions emphasize precision and compliance, while subtitles emphasize readability and cultural nuance.

Step 1. Source Preparation

- Captions work from the final video cut and full audio mix, including dialogue and non-speech sounds.

- Subtitles work from the final video cut and dialogue track.

Step 2. Transcription

- Captions start with a word-for-word transcript, then add speaker labels and sound descriptions.

- Subtitles start with a transcript of the dialogue or its translation.

Step 3. Timecoding

- Captions sync dialogue and sounds with the video. Non-speech cues may appear slightly after the sound.

- Subtitles sync text with dialogue, often timing it to appear just before or exactly as speech begins.
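The timing rules in this step can be illustrated with a small sketch. It formats cue times as SRT timestamps (`HH:MM:SS,mmm`) and shifts cues by an offset: a small negative offset makes a subtitle appear just before speech, and a small positive one lets a non-speech cue trail its sound. The offset values (0.1 s lead, 0.2 s delay) are illustrative assumptions, not values from any standard.

```python
from dataclasses import dataclass


@dataclass
class Cue:
    start: float  # seconds from the start of the video
    end: float    # seconds from the start of the video
    text: str


def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def shift(cue: Cue, offset: float) -> Cue:
    """Shift a cue by `offset` seconds, clamping so it never starts before 0."""
    start = max(0.0, cue.start + offset)
    return Cue(start, max(start, cue.end + offset), cue.text)


# Illustrative offsets (assumptions): subtitles lead speech slightly,
# non-speech caption cues trail the sound slightly.
SUBTITLE_LEAD = -0.1
SOUND_CUE_DELAY = 0.2

line = shift(Cue(12.5, 15.0, "Where were you last night?"), SUBTITLE_LEAD)
sound = shift(Cue(15.2, 16.0, "[door slams]"), SOUND_CUE_DELAY)
print(srt_timestamp(line.start), "-->", srt_timestamp(line.end))
print(srt_timestamp(sound.start), "-->", srt_timestamp(sound.end))
```

Rounding to whole milliseconds before splitting into hours, minutes, and seconds avoids floating-point drift such as 15.399999 appearing as 399 ms.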

Step 4. Sound Annotation

- Captions add bracketed cues like [door slams], [applause], [somber music].

- Subtitles usually skip this step unless they are SDH (Subtitles for the Deaf and Hard of Hearing).
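Because captions and subtitles differ mainly in which cues they keep, one annotated transcript can feed both. The sketch below assumes a hypothetical segment format in which each segment is marked as `"speech"` or `"sound"`. Captions and SDH keep every segment, with sound cues in brackets and an uppercase speaker label (a labeling style chosen here for illustration), while standard subtitles keep dialogue only. Translation is not shown.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Segment:
    kind: str                     # "speech" or "sound" (hypothetical labels)
    text: str
    speaker: Optional[str] = None


def render(segments: List[Segment], track: str) -> List[str]:
    """Render text lines for a 'captions', 'sdh', or 'subtitles' track."""
    if track not in {"captions", "sdh", "subtitles"}:
        raise ValueError(f"unknown track type: {track}")
    full_audio = track in {"captions", "sdh"}
    lines = []
    for seg in segments:
        if seg.kind == "sound":
            # Non-speech cues only appear in captions and SDH.
            if full_audio:
                lines.append(f"[{seg.text}]")
        elif seg.speaker and full_audio:
            # Speaker labels add context for viewers who cannot hear voices.
            lines.append(f"{seg.speaker.upper()}: {seg.text}")
        else:
            lines.append(seg.text)
    return lines


transcript = [
    Segment("sound", "door creaks"),
    Segment("speech", "Is anyone home?", speaker="Maya"),
    Segment("sound", "somber music"),
]
print(render(transcript, "captions"))
print(render(transcript, "subtitles"))
```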

Step 5. Translation

- Captions: optional, needed only if multi-language captions are required.

- Subtitles: often the core step, since translation is central to localization.

Step 6. Quality Check

- For closed captions, check character-level accuracy, timing, and compliance with legal standards.

- For subtitles, check translation fidelity, reading speed, line breaks, and timing accuracy.
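Reading speed and line length lend themselves to automated checks. This sketch flags cues whose characters per second (CPS) or per-line length exceed a limit. The limits used here (17 CPS, 42 characters per line) are placeholder assumptions; substitute the values from your own style guide or target platform.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Cue:
    start: float  # seconds
    end: float    # seconds
    text: str     # may contain "\n" line breaks


# Placeholder limits (assumptions); replace with your style guide's values.
MAX_CPS = 17.0
MAX_LINE_CHARS = 42


def check_cue(cue: Cue) -> List[str]:
    """Return human-readable problems found in one cue (empty list if none)."""
    duration = cue.end - cue.start
    if duration <= 0:
        return ["cue has zero or negative duration"]
    problems = []
    # Count characters, ignoring line breaks.
    chars = len(cue.text.replace("\n", ""))
    cps = chars / duration
    if cps > MAX_CPS:
        problems.append(f"reading speed {cps:.1f} CPS exceeds {MAX_CPS}")
    for i, text_line in enumerate(cue.text.split("\n"), start=1):
        if len(text_line) > MAX_LINE_CHARS:
            problems.append(f"line {i} has {len(text_line)} chars (max {MAX_LINE_CHARS})")
    return problems


print(check_cue(Cue(1.0, 3.0, "Where were you\nlast night?")))
print(check_cue(Cue(1.0, 2.0, "I told you already, I was at the office until midnight.")))
```

The first cue passes; the second is both too fast to read in one second and too long for a single line, so it would need to be split or given more screen time.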

Step 7. Formatting & Export

- Captions are delivered in formats like SCC (broadcast) or WebVTT/TTML (web/OTT).

- Subtitles are commonly exported as SRT, WebVTT, or styled formats (ASS/SSA).
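SRT and WebVTT are both plain-text cue formats, so converting between them is mostly mechanical: a WebVTT file begins with a `WEBVTT` header and uses a period rather than a comma before the milliseconds. The minimal sketch below handles only simple SRT input (no styling tags or positioning) and is not a full parser.

```python
import re

# Matches an SRT timing line such as "00:00:12,400 --> 00:00:14,900".
TIMING = re.compile(
    r"^(\d{2}:\d{2}:\d{2}),(\d{3}) --> (\d{2}:\d{2}:\d{2}),(\d{3})(.*)$"
)


def srt_to_vtt(srt_text: str) -> str:
    """Convert simple SRT text to WebVTT (sketch: no tag or position handling)."""
    out = ["WEBVTT", ""]
    for line in srt_text.strip().splitlines():
        match = TIMING.match(line.strip())
        if match:
            start, start_ms, end, end_ms, rest = match.groups()
            # WebVTT uses "." instead of "," before the milliseconds.
            out.append(f"{start}.{start_ms} --> {end}.{end_ms}{rest}")
        else:
            # Cue numbers are valid WebVTT cue identifiers; text passes through.
            out.append(line)
    return "\n".join(out) + "\n"


srt = """1
00:00:12,400 --> 00:00:14,900
Where were you last night?

2
00:00:15,400 --> 00:00:16,200
[door slams]
"""
vtt = srt_to_vtt(srt)
print(vtt)
```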

Step 8. Integration

- Captions are delivered as a separate track, embedded in the video container or referenced in the streaming manifest.

- Subtitles follow the same embedding process, often with multiple language tracks for localization.
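On the web, the captions/subtitles distinction is built into HTML itself: the `<track>` element's `kind` attribute accepts `captions` and `subtitles` as separate values, and browsers expect WebVTT files as the track source. The sketch below generates one captions track plus one subtitles track per translation; the file-naming scheme (`captions.en.vtt`, `subtitles.es.vtt`) is a hypothetical convention.

```python
from html import escape
from typing import Dict, List


def track_tags(captions_lang: str, captions_label: str,
               subtitles: Dict[str, str]) -> List[str]:
    """Build <track> elements: one captions track, then one subtitles track per language.

    File names follow a hypothetical "<kind>.<lang>.vtt" convention.
    """
    tags = [
        f'<track kind="captions" src="captions.{escape(captions_lang)}.vtt" '
        f'srclang="{escape(captions_lang)}" label="{escape(captions_label)}" default>'
    ]
    for lang, label in subtitles.items():
        tags.append(
            f'<track kind="subtitles" src="subtitles.{escape(lang)}.vtt" '
            f'srclang="{escape(lang)}" label="{escape(label)}">'
        )
    return tags


tags = track_tags("en", "English CC", {"es": "Español", "ja": "日本語"})
print("\n".join(tags))
```

The generated tags go inside a `<video>` element, and the player lists each one as a selectable option.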

Similarities: Both start with the final video, rely on transcripts, get synced to the timeline, and end up exported as text tracks for playback.

The big differences lie in transcription, sound annotation, and translation. Captions are all about accessibility: they cover every sound, not just dialogue, and are often subject to regulation. Subtitles are about language access to spoken words, with translation and cultural adaptation playing a central role.

Closed Captions vs. Subtitles: How to Choose

When to Use Closed Captions

Closed captions are essential when your goal is accessibility or engagement, or when viewers are likely to watch muted.

For example, if you’re producing a public-facing online course, corporate training video, government resource, or broadcast content, captions are often needed. They’re also a smart choice for social media videos or workplace tools, where many people watch muted.

When to Use Subtitles

Subtitles are necessary in multilingual contexts. They’re the go-to option for localizing films, e-learning courses, or global streaming releases.

Choose subtitles when you need to preserve the original audio (a nuanced performance, a distinctive voice, or a subtle tone) while making sure the dialogue is understood. Subtitles help creators break language barriers and reach a wider audience.

When to Use Both

In many cases, captions and subtitles work best together. For example, you might need English captions to meet accessibility requirements while also offering translated subtitles for international audiences.

Quick Comparison Table of Closed Captions vs. Subtitles

| Aspect | Closed Captions (CC) | Subtitles |
|---|---|---|
| Purpose | Make content accessible by fully representing the original audio | Aid comprehension as a supplement to the original audio |
| Audience | Deaf, hard-of-hearing, or muted viewers | Hearing viewers who don’t understand the language |
| Target Content | All sounds, including dialogue, music, tone, and speaker cues | Dialogue only |
| Language | Usually the same as the original audio | Same or translated |
| Use Case | Accessibility, engagement, and learning retention | Localization, international distribution, and accent clarity |

How to Generate Closed Captions and Subtitles

With AI tools like VMEG, creating videos with closed captions and subtitles is easier than ever.

VMEG AI is a comprehensive localization platform that offers powerful tools for generating both closed captions and subtitles, designed to enhance accessibility and expand audience reach.

Key Features

- Auto-Generate Subtitles and Captions: Instantly create subtitles or captions by uploading your video.

- Multilingual Support: Generate subtitles and captions in over 170 languages and dialects, including English, Spanish, Arabic, Chinese, Japanese, and more.

- Customizations: Edit subtitle text, adjust timing, and change the style.

- Voice Cloning and Lip Sync: Use VMEG's voice cloning and lip-sync technology to maintain a consistent voice and tone across different languages.

- Multi-Speaker Detection: Automatically identify and label multiple speakers in your video, enhancing clarity and context.

How to Generate Subtitles in 3 Steps

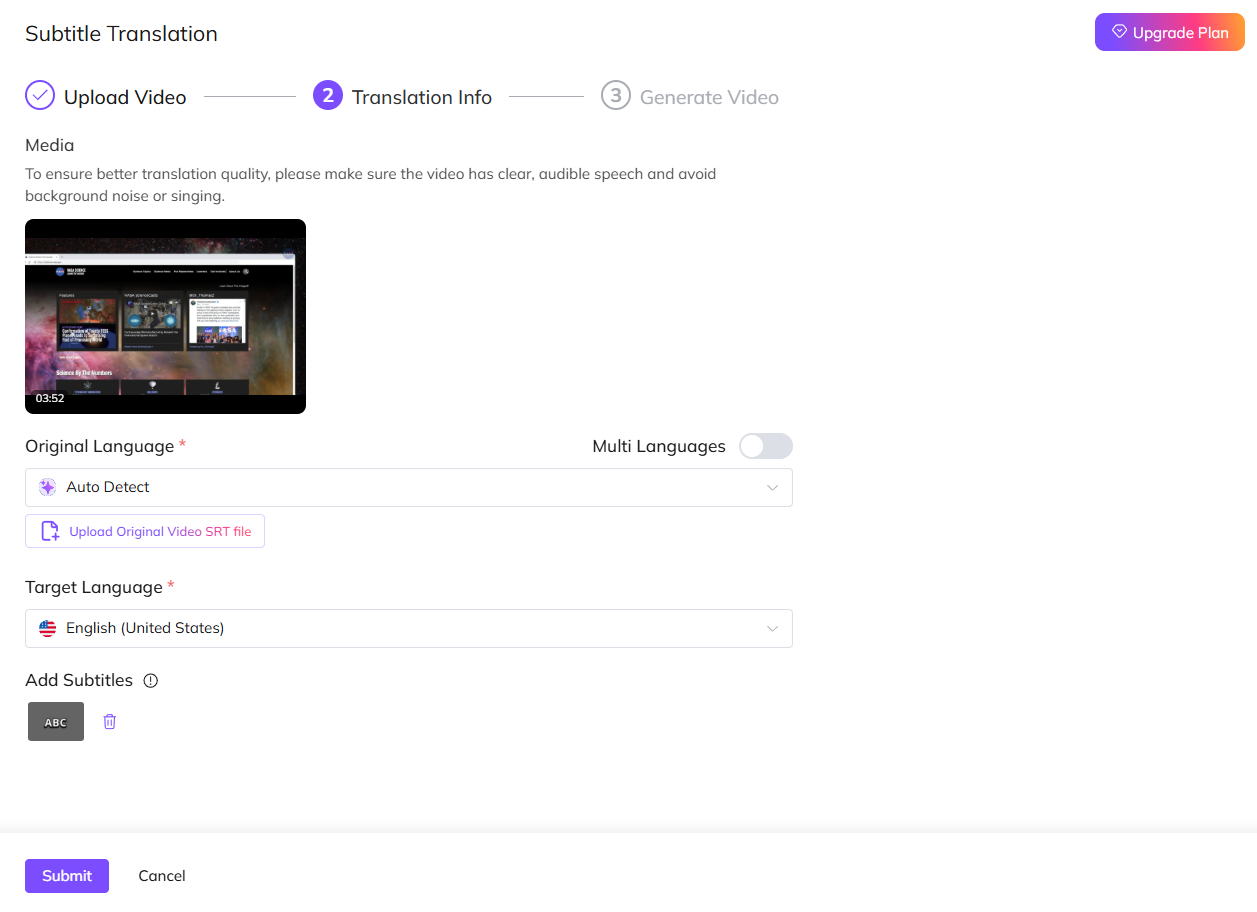

Step 1. Upload Your Video

Step 2. Select Original and Target Language

Select the video's original language and the target language for the subtitles. Then choose the number of speakers and submit.

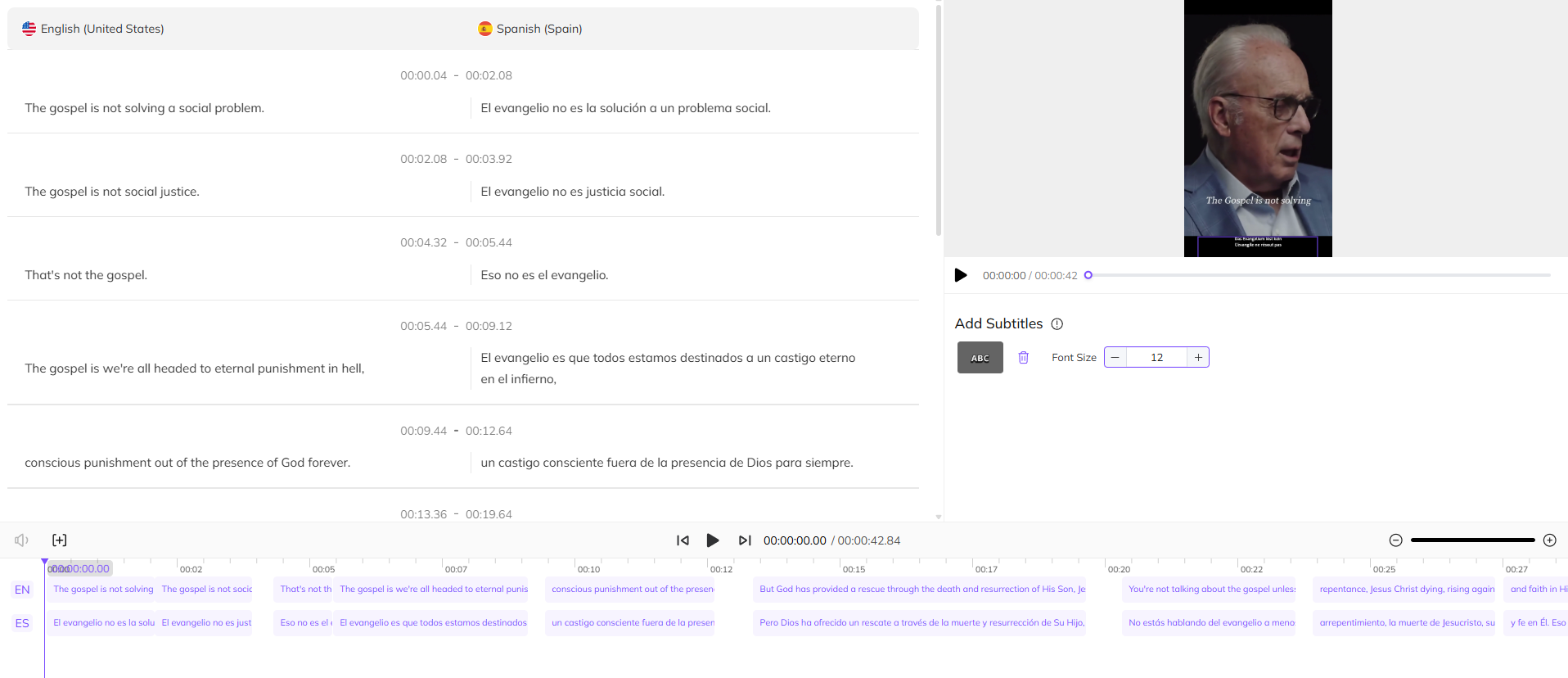

Step 3. Edit Subtitles and Export

When the translation is ready, check its accuracy and adjust the subtitle style. Once done, click the Download button to get the edited video with embedded subtitles.

FAQs about Closed Captions vs. Subtitles

Are closed captions the same as subtitles?

No. Captions include all audio information; subtitles focus on spoken dialogue, often in a different language.

What are open captions?

Open captions are captions permanently burned into the video, which cannot be turned off. Closed captions exist as a separate selectable track that can be turned off.
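In practice, the open/closed distinction comes down to whether the text is drawn into the video frames or stored as its own stream. The sketch below only builds two FFmpeg argument lists and does not run them. It assumes FFmpeg is installed (the burn-in case needs a build with the libass-based `subtitles` filter), that the input video has no existing subtitle streams, and that file names contain no characters needing filter escaping; the file names are hypothetical.

```python
from typing import List


def open_caption_cmd(video: str, subs: str, out: str) -> List[str]:
    """Open captions: burn the text into the picture (the video is re-encoded)."""
    return ["ffmpeg", "-i", video, "-vf", f"subtitles={subs}", "-c:a", "copy", out]


def closed_caption_cmd(video: str, subs: str, out: str, lang: str = "eng") -> List[str]:
    """Closed captions: add the text as a separate, switchable stream in an MP4."""
    return [
        "ffmpeg", "-i", video, "-i", subs,
        "-map", "0", "-map", "1",
        # Copy audio/video as-is; convert the text track to MP4's mov_text format.
        "-c", "copy", "-c:s", "mov_text",
        # Tag the (assumed only) subtitle stream with its language.
        "-metadata:s:s:0", f"language={lang}",
        out,
    ]


print(" ".join(open_caption_cmd("talk.mp4", "talk.en.srt", "talk_open.mp4")))
print(" ".join(closed_caption_cmd("talk.mp4", "talk.en.srt", "talk_cc.mp4")))
```

To execute either command, pass the list to `subprocess.run(cmd, check=True)`.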

Should I create captions first or subtitles first?

Captions first. A high-quality source transcript with sound context is the first step for efficient translation and subtitle adaptation.

Which is better, subtitles or captions?

Captions make your videos accessible by showing every sound and dialogue, while subtitles help viewers understand the language without losing the original audio. If you want maximum reach, offering both is the smartest move.

Is there a difference between transcription and captioning?

Yes. Transcription converts audio into raw text. Captioning goes further, adding timing, segmentation, and non-speech annotations.
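The segmentation part of captioning can be sketched as grouping timed words into cues. This sketch assumes the transcript already carries word-level timestamps and uses a hypothetical maximum cue length; a real captioning pass would also add non-speech annotations and respect sentence boundaries.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Word:
    text: str
    start: float  # seconds
    end: float    # seconds


def words_to_cues(words: List[Word], max_chars: int = 42) -> List[Tuple[float, float, str]]:
    """Group timed words into (start, end, text) cues of at most max_chars characters.

    A single word longer than max_chars still becomes its own cue.
    """
    cues = []
    current: List[Word] = []
    for word in words:
        candidate = " ".join(w.text for w in current + [word])
        if current and len(candidate) > max_chars:
            # Close the current cue and start a new one with this word.
            cues.append((current[0].start, current[-1].end, " ".join(w.text for w in current)))
            current = []
        current.append(word)
    if current:
        cues.append((current[0].start, current[-1].end, " ".join(w.text for w in current)))
    return cues


words = [
    Word("Captioning", 0.0, 0.6),
    Word("adds", 0.6, 0.9),
    Word("timing", 0.9, 1.3),
    Word("and", 1.3, 1.4),
    Word("segmentation.", 1.4, 2.2),
]
for cue in words_to_cues(words, max_chars=20):
    print(cue)
```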

Conclusion

Closed captions and subtitles may look similar at first glance, since both appear as text on screen, but they serve very different purposes. This post covered the key differences and similarities between them, including purpose, audience, and production process.

Choose the right option based on your needs: accessibility, user experience, engagement, or global reach. For many creators, the best approach is a combination of captions for accessibility and subtitles for translation.

Generate Subtitles or Captions Easily

With support for over 170 languages, VMEG's AI-driven platform provides accurate, time-synced captions and subtitles tailored to your needs.